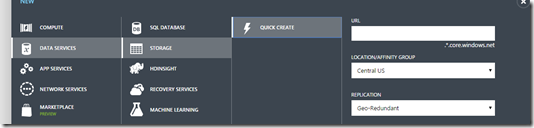

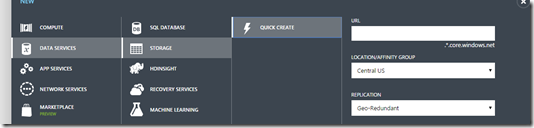

Setting up a share in Azure Files is straightforward, and a few lines of PowerShell are all it takes.

This post assumes that you have applied to the Azure Files Preview program and received the notification email from the Azure team saying that Azure Files is available for your subscription.

From then on, Azure Files is available for every storage account you create. Storage accounts created before the feature was enabled for your subscription do not get it.

After you’ve created a new storage account, Azure Files is already configured and shows up in the service list on the storage dashboard in the Azure portal.

Create a share in PowerShell

Creating a share in PowerShell takes two steps. First, set up a storage context object that holds the storage account credentials. Then pass that context object and the name of the share to the New-AzureStorageShare cmdlet.

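    # Storage account name, access key, and the name of the share to create.
    param (
        [string]$StorageAccount = "",
        [string]$StorageKey = "",
        [string]$ShareName = ""
    )

    # Build a storage context that carries the account credentials.
    $ctx = New-AzureStorageContext -StorageAccountName $StorageAccount -StorageAccountKey $StorageKey

    # Create the file share using that context.
    $share = New-AzureStorageShare $ShareName -Context $ctx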

To run the script above you need the name of your storage account, its access key (which you can find in the keys section of the storage dashboard in the Azure portal), and the name of the share you want to create.
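As a usage sketch, assuming the script above was saved as New-FileShare.ps1 (a hypothetical file name) and using placeholder values, calling it and checking the result could look roughly like this:

```powershell
# Placeholder values; replace them with your own storage account details.
$account = "<account name>"
$key     = "<account key>"
$name    = "<share name>"

# Run the script. New-FileShare.ps1 is an assumed file name, not one from this post.
.\New-FileShare.ps1 -StorageAccount $account -StorageKey $key -ShareName $name

# Read the share back with the same credentials to confirm it was created.
$ctx = New-AzureStorageContext -StorageAccountName $account -StorageAccountKey $key
Get-AzureStorageShare -Name $name -Context $ctx
```

If the share exists, Get-AzureStorageShare returns it. Otherwise the cmdlet reports an error.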

Once that’s done, your Azure Files share is ready to use. The easiest way to access the share is to run a net use command from the command line, for example:

net use z: \\<account name>.file.core.windows.net\<share name> /u:<account name> <account key>

The command above maps the UNC path \\<account name>.file.core.windows.net\<share name> to a drive named z:. From this moment on, the files and directories of the share are available under z:\
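If you prefer to stay in PowerShell, the same mapping can probably be done with New-PSDrive. The following is a minimal sketch with placeholder values, not something taken from the Azure documentation. The variable names are my own.

```powershell
# Placeholder values; replace them with your own storage account details.
$account = "<account name>"
$key     = "<account key>"
$share   = "<share name>"

# The user name is the storage account name, the password is the access key.
$secureKey  = ConvertTo-SecureString -String $key -AsPlainText -Force
$credential = New-Object System.Management.Automation.PSCredential -ArgumentList $account, $secureKey

# Map the share to Z:. -Persist creates a regular Windows mapped drive.
$root = "\\${account}.file.core.windows.net\${share}"
New-PSDrive -Name Z -PSProvider FileSystem -Root $root -Credential $credential -Persist

# List the root of the share to check the mapping.
Get-ChildItem Z:\
```

Note that when this runs inside a script rather than at the prompt, the drive may disappear when the script ends unless you dot-source the script or add -Scope Global.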

Unfortunately, it’s not possible to access files through a mapped drive like this in IIS. Instead, you will receive errors such as an authentication failure, Web.config not accessible, or simply a page not found error.

Also, you can’t configure ACLs for the share, which makes it difficult to allow the app pool identity to access the shared directories.

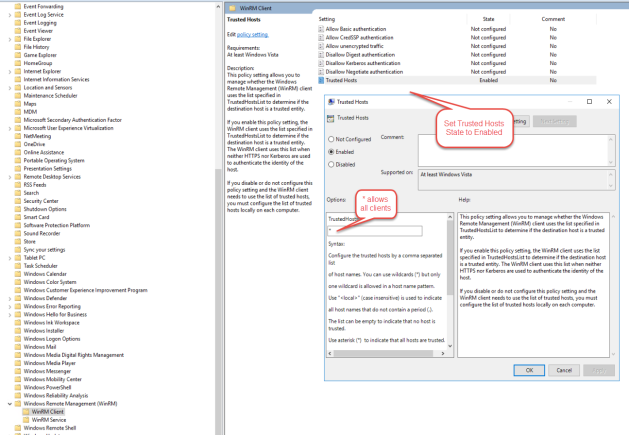

How to prepare the IIS application pool

Let’s say we have a web application that first needs to create images on the share. Clients should also be able to request those images over HTTP.

1) First, create a new local user with the Computer Management snap-in. Use the storage account name as the user name and the storage access key as the password.

2) Now make the new user the identity of the application pool in IIS.

3) Set up a virtual directory that points to the UNC path of the share.

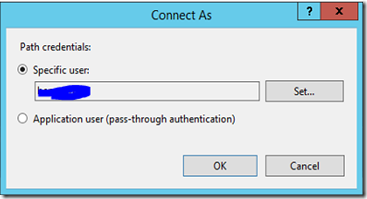

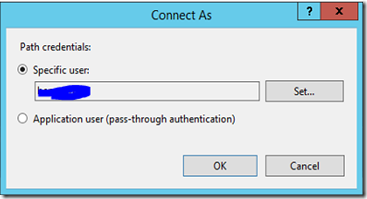

4) Open the Edit Virtual Directory dialog (select Basic Settings… in the right pane) and click Connect as… to choose the user for the connection.

5) In the Connect as… dialog, select the specific user option and enter the same credentials as for the application pool. (I tried the Application user (pass-through authentication) option, but could not access the files in the browser that way.)

Now you should be able to read and write files on the share from the application, and browser clients should be able to stream resources from the share as well.
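The five steps above can probably be scripted as well. The following is an untested sketch and not the way I set it up. It assumes Windows PowerShell 5.1 (for New-LocalUser), the WebAdministration module, an elevated prompt, and a storage account name of at most 20 characters, which is the limit for local user names. The pool, site and virtual directory names are made-up examples.

```powershell
Import-Module WebAdministration

# Placeholder values; replace them with your own storage account details.
$account  = "<account name>"       # also used as the local user name
$key      = "<account key>"        # also used as the password
$share    = "<share name>"
$poolName = "DefaultAppPool"       # assumed application pool name
$siteName = "Default Web Site"     # assumed site name
$vdirName = "images"               # assumed virtual directory name
$uncPath  = "\\${account}.file.core.windows.net\${share}"

# 1) Create the local user: account name as user name, access key as password.
$secureKey = ConvertTo-SecureString -String $key -AsPlainText -Force
New-LocalUser -Name $account -Password $secureKey -PasswordNeverExpires

# 2) Make that user the application pool identity (identityType 3 = SpecificUser).
Set-ItemProperty "IIS:\AppPools\$poolName" -Name processModel -Value @{ identityType = 3; userName = $account; password = $key }

# 3) Create a virtual directory that points to the UNC path of the share.
New-WebVirtualDirectory -Site $siteName -Name $vdirName -PhysicalPath $uncPath

# 4) and 5) Set the "Connect as" credentials of the virtual directory to the same user.
Set-ItemProperty "IIS:\Sites\$siteName\$vdirName" -Name userName -Value $account
Set-ItemProperty "IIS:\Sites\$siteName\$vdirName" -Name password -Value $key
```

If New-WebVirtualDirectory complains that the UNC path cannot be validated, its -Force switch may help. Keep in mind that the script holds the storage key in plain text, so treat it like any other secret.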